|

| Recent Posts | - May, 2025-5,(1)

- April, 2025-4,(1)

- January, 2025-1,(1)

- July, 2024-7,(1)

- May, 2024-5,(2)

- May, 2023-5,(1)

- February, 2023-2,(1)

- November, 2022-11,(1)

- July, 2022-7,(2)

- March, 2022-3,(1)

- November, 2021-11,(2)

- August, 2021-8,(2)

- July, 2021-7,(2)

- June, 2021-6,(1)

- May, 2021-5,(1)

- March, 2021-3,(1)

- February, 2021-2,(2)

- January, 2021-1,(7)

- December, 2020-12,(3)

- March, 2020-3,(2)

- February, 2020-2,(1)

- December, 2019-12,(2)

- November, 2019-11,(1)

- October, 2019-10,(1)

- September, 2019-9,(1)

- August, 2019-8,(1)

- May, 2019-5,(1)

- April, 2019-4,(2)

- March, 2019-3,(2)

- December, 2018-12,(1)

- November, 2018-11,(4)

- July, 2018-7,(1)

- May, 2018-5,(3)

- April, 2018-4,(2)

- February, 2018-2,(3)

- January, 2018-1,(3)

- November, 2017-11,(2)

- August, 2017-8,(1)

- June, 2017-6,(3)

- May, 2017-5,(5)

- February, 2017-2,(1)

- December, 2016-12,(1)

- October, 2016-10,(2)

- September, 2016-9,(1)

- August, 2016-8,(1)

- July, 2016-7,(1)

- March, 2016-3,(2)

- February, 2016-2,(3)

- December, 2015-12,(5)

- November, 2015-11,(5)

- September, 2015-9,(1)

- August, 2015-8,(2)

- July, 2015-7,(1)

- March, 2015-3,(2)

- February, 2015-2,(1)

- December, 2014-12,(4)

- July, 2014-7,(2)

- June, 2014-6,(2)

- May, 2014-5,(3)

- April, 2014-4,(3)

- March, 2014-3,(1)

- December, 2013-12,(2)

- November, 2013-11,(1)

- July, 2013-7,(1)

- June, 2013-6,(2)

- May, 2013-5,(1)

- March, 2013-3,(3)

- February, 2013-2,(3)

- January, 2013-1,(1)

- December, 2012-12,(3)

- November, 2012-11,(1)

- October, 2012-10,(1)

- September, 2012-9,(1)

- August, 2012-8,(1)

- July, 2012-7,(6)

- June, 2012-6,(1)

- April, 2012-4,(1)

- March, 2012-3,(3)

- February, 2012-2,(3)

- January, 2012-1,(4)

- December, 2011-12,(3)

- October, 2011-10,(3)

- September, 2011-9,(1)

- August, 2011-8,(10)

- July, 2011-7,(2)

- June, 2011-6,(7)

- March, 2011-3,(2)

- February, 2011-2,(3)

- January, 2011-1,(1)

- September, 2010-9,(1)

- August, 2010-8,(2)

- June, 2010-6,(1)

- May, 2010-5,(1)

- April, 2010-4,(3)

- March, 2010-3,(2)

- February, 2010-2,(3)

- January, 2010-1,(1)

- December, 2009-12,(3)

- November, 2009-11,(3)

- October, 2009-10,(2)

- September, 2009-9,(5)

- August, 2009-8,(3)

- July, 2009-7,(9)

- June, 2009-6,(2)

- May, 2009-5,(2)

- April, 2009-4,(9)

- March, 2009-3,(6)

- February, 2009-2,(4)

- January, 2009-1,(10)

- December, 2008-12,(5)

- November, 2008-11,(5)

- October, 2008-10,(13)

- September, 2008-9,(10)

- August, 2008-8,(7)

- July, 2008-7,(8)

- June, 2008-6,(12)

- May, 2008-5,(14)

- April, 2008-4,(12)

- March, 2008-3,(17)

- February, 2008-2,(10)

- January, 2008-1,(16)

- December, 2007-12,(6)

- November, 2007-11,(4)

|

|

|

|

Blog posts for the month of month,2008.

|

|

|

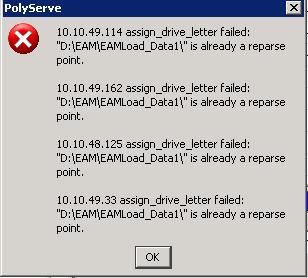

| Assign_Drive_Letter Failed is already a reparse point | 8/17/2008 3:42:27 PM |

I love patching clustered servers (not!), and patching polyserve is usually quite simple and not an issue. I'm not referring to Polyserve patches, but Operating System (microsoft, hba, other drivers etc) patches.

We normally have designed a special "rolling" sequential patch of 15 minute intervals for the clustered servers, trying to give each server enough time to recover before the next one rolls.

Something happenned with that sequence, and things rolled like a ping-pong ! Polyserve stabilized, but there was an issue with two instances. Review of the issue determined that the mount points that these instances rely on, did not happen, meaning the mounts failed.

I attempted to remount the volumes and received the following error, "10.10.49.114 assign_drive_letter failed: "D:\Mounts\SysData\" is already a reparse point.".

I'm not really sure why this happenned, meaning windows, polyserve, san related root cause. I attempted to reboot the nodes that these failed on and recieved the same error, hey a reboot fixes everything under windoze, right ?

I found the only way to correct this was to find the folder where these "reparse points" (junction point or mount point) stem from and to completely delete that folder. So in the above example I had to delete the folder eamload_data1 under eam (remember to do this on each node that has a problem). I then assigned the mount point as normal, and all the instances worked fine.

I've seen sporadic issues with mount points in the past, polyserve and non-polyserve. See a previous point here on how to remove a ghosted mount point...

http://www.lifeasbob.com/2008/03/25/ManuallyRemoveAMountPoint.aspx

It'll be interesting to see if this happens again or was a "one time" deal. |

| Assigning SQL Server a Static Port Number | 8/5/2008 8:45:40 AM |

Assigning SQL Server a static port number is necessary for many reasons, cluster, firewalls, security through obsecurity etc. We use Polyserve and often have to assign port numbers, we've never really had a good guide to follow on this, even the Polyserve documentation doesn't really have a white paper or a short paragraph on a port numbering strategy. After several years now of running static ports, one is definetly needed. Before you can create a strategy for your Polyserve environment or SQL Server, the below except is a great generic explanation about port numbering and uses.

This is from December 1999, but is just as relevant today. Source = http://www.microsoft.com/technet/archive/community/columns/inside/techan23.mspx?mfr=true

Most everything you ever wanted to know about TCP/IP Port Numbers

Port numbers are divided into three ranges: the Well-Known Ports, the Registered Ports, and the Dynamic and/or Private Ports. The Well-Known Ports are those from 0 through 1023.The Registered Ports are those from 1024 through 49151. The Dynamic and/or Private Ports are those from 49152 through 65535.

Well-Known Ports are assigned by Internet Assigned Numbers Authority (IANA) and should only be used by System Processes or by programs executed by privileged users. An example of this type of port is 80/TCP and 80/UDP. These ports are privileged and reserved for use by the HTTP protocol.

Registered Ports are listed by the IANA and on most systems can be used by ordinary user processes or programs executed by ordinary users. An example of this type of port is 1723/TCP and 1723/UDP. Although other processes can use these ports, they are generally accepted as the connection control port for Point To Point Tunneling Protocol (PPTP).

Dynamic or Private Ports can be used by any process or user, and are unrestricted.

IANA maintains a list of ports on their Web site (http://www.isi.edu/in-notes/iana/assignments/port-numbers)

|

| Get Your Winter Brew on... | 8/9/2008 9:15:20 AM |

Summer is still in full swing, but we've had a day or two of nice weather. A quick check of the brewing calendar shows it's time to get those Heavy winter brews started.

I'm working on two of my favorites this weekend:

- Hard Red Winter Wheat

- Bus Oil

Hard Red Winter Wheat is a Belgian Abbey, styled after a trip through Kansas City's Board of Trade. On the monitor were futures for Hard Red Winter Wheat, hence I designed this beer around that name.

Bus Oil is a Strong Porter, finished with Vanilla Bean for a very subtle, silky smooth black brew.

Both of these are very strong, perfect for winter and both require a good 2-3 months to reach peak, so "get your winter brew on", now... |

| Heather Ale (Braggot) | 8/13/2008 9:58:28 AM |

Finally got around to tasting the Heather Ale, it's a fine line between Braggot and Ale, but after tasting this, it is definetly an Ale.

The beer is definetly unique. It pours nicely, quickly developing a very thick creamy head. First impression is the nose, wow, the combination of Heather and some of the "bee items" (propolis and pollen) creates a cross of freshly crushed pine needles.

The beer takes a bit too drink, it is not a light beer and has some attitude. One or two bottles of this and things can look right with the world. I'm quite pleased with the balance and flavors of the beer, which is always a challenge with "big" alcohol heavy beers. Definetly the dominating profile is the nose from the heather and "bee items".

I want to brew this again, but will definetly cut back on the Bee Pollen and Propolis to 1 or 2 ounces from 8. Also the Heather Honey from Scotland costs a fortune ($80 for 1/2 gallon), so I'm going to have to find a reasonable subsititute. Though in my mind the comination of the Heather Honey and Heather tips is what makes this "Heather Ale". I may try a substitute and compare them. I currently have a 1/2 gallon of Meadow Foam Honey, which is also some expensive and rare stuff, wonder how it will brew up...Link to original post on creating Heather Ale.

The beer has only been aged 6 months in the bottle (feb 08), having been brewed in Nov 07. These honey based ales (braggot), take several months to brew and can easily improve with age.

It'll be interesting to taste over the next year.

|

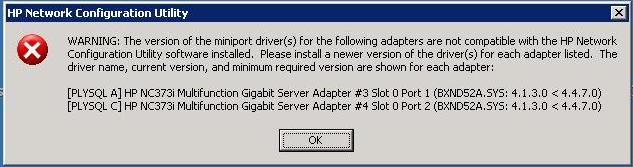

| HP PSP Upgrade, causes Miniport driver error | 8/12/2008 9:10:44 AM |

Recently we upgraded the Proliant Support Pack (PSP) to higher version (8.10.0.0), and then we began to recieve the following error from the HP Network configuration Utility:

WARNING: The version of the miniport driver(s) for the following adapters are not compatible with the HP Network Configuration Utility software installed. Please install a newer version of the driver(s) for each adapter listed. The driver name, current version, and minimum required version are shown for each adapter:

Don't forget to upgrade your other software after upgrading the Proliant Support Pack. |

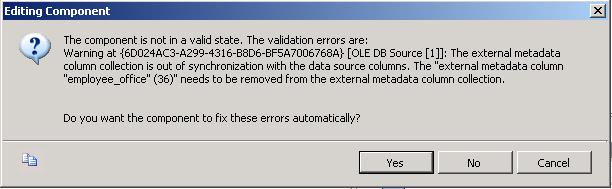

| SSIS Stored Procedure Metadata | 8/15/2008 12:56:35 PM |

Working with SSIS, a stored procedure was recently changed from a simple select statement to include some more procedural statements that utilized temporary tables. Surprisingly the SSIS package stopped working, even though the result set from the stored procedure remained the same. One of the reasons to use the stored procedure in ssis, was to avoid embedding the login in the SSIS packages related to data access, what gives ?

Error is:

The component is not in a valid state. The validation errors are:

Warning at {6D024AC3-A299-4316-B8D6-BF5A7006768A} [OLE DB Source [1]]: The external metadata column collection is out of synchronization with the data source columns. The "external metadata column "employee_office" (36)" needs to be removed from the external metadata column collection.

Do you want the component to fix these errors automatically?

Some Quick research indicates this error is exactly what it says, metadata. SSIS needs to know the metadata, for stored procedures, using an ole db data source, it does this by querying the first statement in the stored procedure, if the first statements are temporary tables and not a select statement , you've got the problem. I've seen instances where temporary variables are ok and don't cause the problem.

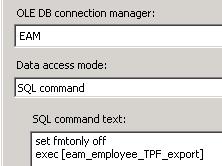

I've seen several solutions to this problem using "set fmtonly off" in the SSIS call and "set nocount on" in the stored procedure. While "set nocount on" is usually recommended, i'm not too pleased with the "set fmtonly off" solution, as I've read issues where this may or may not work and cause performance issues.

There seem to be several different ways to fix this, each of them slightly different, my preference is option 3.

1. Put "set nocount on" in the stored procedure, put "set fmtonly off" before the execution of the procedure [in the ssis call].

2. re-write the stored procedure to use table variables instead of temporary tables, a good solution as well.

3. Add some meta-data and the "set nocount on" to the stored procedure with a "short circuited if clause" (if 1=0), and a bogus select statement at the top. I've tested with trying to leave the "set nocount on" out and it did not work.

CREATE PROCEDURE [dbo].[GenMetadata]

AS

SET NOCOUNT ON

IF 1 = 0

BEGIN

-- Publish metadata

SELECT CAST(NULL AS INT) AS id,

CAST(NULL AS NCHAR(10)) AS [Name],

CAST(NULL AS NCHAR(10)) AS SirName

END

-- Do real work starting here

CREATE TABLE #test

(

[id] [int] NULL,

[Name] [nchar](10) NULL,

[SirName] [nchar](10) NULL

)

References:

http://www.delphi.co.za/PermaLink,guid,56a56e23-d9d5-44dd-9f0b-0f8b80d0b462.aspx

http://forums.microsoft.com/MSDN/ShowPost.aspx?PostID=572199&SiteID=1

http://scotta-businessintelligence.blogspot.com/2007/05/ssis-ole-db-source-component-stored.html

http://blogs.conchango.com/jamiethomson/archive/2006/12/20/SSIS_3A00_-Using-stored-procedures-inside-an-OLE-DB-Source-component.aspx

|

| Verify Block Size on NTFS | 8/5/2008 3:37:25 PM |

Verify your block size, the default in windows is 4096.

The debate always is what is the optimal block size, I prefer a block size of 64K for NTFS Volumes.

You need to fsinfo to verify this, you can see below this d drive is at 4096, the default, Microsoft recommends a block size of 8K or greater, for volumes hosting sql server databases (the os is fine at 4096). Block Sizes smaller than 8K may lead to data corruption.

fsutil fsinfo ntfsinfo f

c:\>fsutil fsinfo ntfsinfo d:

NTFS Volume Serial Number : 0x60f2135af213342e

Version : 3.1

Number Sectors : 0x000000000237efff

Total Clusters : 0x000000000046fdff

Free Clusters : 0x000000000046bcfa

Total Reserved : 0x0000000000000000

Bytes Per Sector : 512

Bytes Per Cluster : 4096

Bytes Per FileRecord Segment : 1024

Clusters Per FileRecord Segment : 0

Mft Valid Data Length : 0x0000000000008000

Mft Start Lcn : 0x00000000000c0000

Mft2 Start Lcn : 0x0000000000237eff

Mft Zone Start : 0x00000000000c0000

Mft Zone End : 0x000000000014dfc0

|

|

Blog Home

|

|

|

|