|

| Recent Posts | - May, 2025-5,(1)

- April, 2025-4,(1)

- January, 2025-1,(1)

- July, 2024-7,(1)

- May, 2024-5,(2)

- May, 2023-5,(1)

- February, 2023-2,(1)

- November, 2022-11,(1)

- July, 2022-7,(2)

- March, 2022-3,(1)

- November, 2021-11,(2)

- August, 2021-8,(2)

- July, 2021-7,(2)

- June, 2021-6,(1)

- May, 2021-5,(1)

- March, 2021-3,(1)

- February, 2021-2,(2)

- January, 2021-1,(7)

- December, 2020-12,(3)

- March, 2020-3,(2)

- February, 2020-2,(1)

- December, 2019-12,(2)

- November, 2019-11,(1)

- October, 2019-10,(1)

- September, 2019-9,(1)

- August, 2019-8,(1)

- May, 2019-5,(1)

- April, 2019-4,(2)

- March, 2019-3,(2)

- December, 2018-12,(1)

- November, 2018-11,(4)

- July, 2018-7,(1)

- May, 2018-5,(3)

- April, 2018-4,(2)

- February, 2018-2,(3)

- January, 2018-1,(3)

- November, 2017-11,(2)

- August, 2017-8,(1)

- June, 2017-6,(3)

- May, 2017-5,(5)

- February, 2017-2,(1)

- December, 2016-12,(1)

- October, 2016-10,(2)

- September, 2016-9,(1)

- August, 2016-8,(1)

- July, 2016-7,(1)

- March, 2016-3,(2)

- February, 2016-2,(3)

- December, 2015-12,(5)

- November, 2015-11,(5)

- September, 2015-9,(1)

- August, 2015-8,(2)

- July, 2015-7,(1)

- March, 2015-3,(2)

- February, 2015-2,(1)

- December, 2014-12,(4)

- July, 2014-7,(2)

- June, 2014-6,(2)

- May, 2014-5,(3)

- April, 2014-4,(3)

- March, 2014-3,(1)

- December, 2013-12,(2)

- November, 2013-11,(1)

- July, 2013-7,(1)

- June, 2013-6,(2)

- May, 2013-5,(1)

- March, 2013-3,(3)

- February, 2013-2,(3)

- January, 2013-1,(1)

- December, 2012-12,(3)

- November, 2012-11,(1)

- October, 2012-10,(1)

- September, 2012-9,(1)

- August, 2012-8,(1)

- July, 2012-7,(6)

- June, 2012-6,(1)

- April, 2012-4,(1)

- March, 2012-3,(3)

- February, 2012-2,(3)

- January, 2012-1,(4)

- December, 2011-12,(3)

- October, 2011-10,(3)

- September, 2011-9,(1)

- August, 2011-8,(10)

- July, 2011-7,(2)

- June, 2011-6,(7)

- March, 2011-3,(2)

- February, 2011-2,(3)

- January, 2011-1,(1)

- September, 2010-9,(1)

- August, 2010-8,(2)

- June, 2010-6,(1)

- May, 2010-5,(1)

- April, 2010-4,(3)

- March, 2010-3,(2)

- February, 2010-2,(3)

- January, 2010-1,(1)

- December, 2009-12,(3)

- November, 2009-11,(3)

- October, 2009-10,(2)

- September, 2009-9,(5)

- August, 2009-8,(3)

- July, 2009-7,(9)

- June, 2009-6,(2)

- May, 2009-5,(2)

- April, 2009-4,(9)

- March, 2009-3,(6)

- February, 2009-2,(4)

- January, 2009-1,(10)

- December, 2008-12,(5)

- November, 2008-11,(5)

- October, 2008-10,(13)

- September, 2008-9,(10)

- August, 2008-8,(7)

- July, 2008-7,(8)

- June, 2008-6,(12)

- May, 2008-5,(14)

- April, 2008-4,(12)

- March, 2008-3,(17)

- February, 2008-2,(10)

- January, 2008-1,(16)

- December, 2007-12,(6)

- November, 2007-11,(4)

|

|

|

|

Blog posts for the month of month,2008.

|

|

|

| Appliance Delivery | 9/8/2008 3:00:50 PM |

Might as well have been deliverance...

Send the wife out to scope out a good deal for a new washer and dryer, emphasizing the need to be cheap and ensure it includes delivery and pickup of the old ones, i'm too old to be squabling over $50.00 and breaking my back lugging the crap up and down steps.

Great deal secured.

Deliverance, er... I mean delivery begins.

Quiet, delivery shows up at 8:30pm in the evening, right as rug-rats are about asleep. No problem, let the men get to work...take that back, let the man get back to work. Man is late 60's, wearing a knee brace / prosthesis thingy on his leg, teeters worse than my mother-in-law, and barely speaks.

Somewhere, as I'm watching this unfold, i'm imagining a Monty' python or Saturday night live skit. I'm guilted into helping, just because I can't take the site of this guy muscling around a washer and dryer, up a flight of steps, while I cooly enjoy a frosty mug. The guy's reluctant to let anyone help, as he thud, thud's and grunts his way through his work. I keep expecting the guy to drop dead any moment, i briefly wonder if i'm liable for that...

Finally as the task is almost complete, the sound of breaking glass...fortunately it's just my favorite ashtray out back, which has been around since my Marine Corps days, and was wide enough for my favorite cigars, graced the desks of Colonels, Master Gunny's and lowley Sergeants desk's (I remember when you could smoke at your desk!).

Fortunately the task is done.

|

| Change Default Schema for all users | 9/3/2008 10:46:40 AM |

Recently ran into an issue where the default schema for all users in a database needed to be changed to dbo. This was a database that was upgraded from sql server 2000 to 2005, of course a schema was created for each user. We quickly deleted the user schema's (as they weren't used), but for some reason the default schema for each user was left as their own. There was no quick way with the gui to change this, so I generated the following query which creates the command to alter the users as an output of column1, cut and past it into a new query window and execute.

SQL 2K5 Only.

Changes the default schema for all users.

Will generate a script in column 1, cut and paste to new query window.

Will fail for Windows groups.

SELECT

'Alter user [' + u.name + '] with default_schema = dbo' as command,

u.name AS [Name],

'Server[@Name=' + quotename(CAST(serverproperty(N'Servername') AS sysname),'''') + ']' + '/Database[@Name=' + quotename(db_name(),'''') + ']' + '/User[@Name=' + quotename(u.name,'''') + ']' AS [Urn],

u.principal_id AS [ID],

CAST(CASE dp.state WHEN N'G' THEN 1 WHEN 'W' THEN 1 ELSE 0 END AS bit) AS [HasDBAccess],

u.create_date AS [CreateDate]

FROM

sys.database_principals AS u

LEFT OUTER JOIN sys.database_permissions AS dp ON dp.grantee_principal_id = u.principal_id and dp.type = N'CO'

WHERE

(u.type in ('U', 'S', 'G', 'C', 'K'))

and name not in ('sys','dbo','guest','INFORMATION_SCHEMA')

ORDER BY

[Name] ASC

|

| DLM.EXE | 9/19/2008 8:56:05 AM |

This post could be titled as:

- Moron

- Don't Jump to conclusions

- Research things appropriately

- Think

Recently I experienced a machine that was running under some cpu pressure. Seeing that it was QA and during a load test, we were concerned with what was taking place on the machine. The machine was running 20-30%, not bad, but sqlservr.exe was only using 10%. Tracking down what else was taking place on the machine was not easy.

I focused in on DLM.EXE, wow, whats this...well being a moron, not thinking, jumping to conclusions and doing improper research....caused this....

A quick search in google, showed that DLM.exe shouldn't really be running and is often used in viruses etc, so I killed it, than of course, the machine immediately crashed, crap. Like I said, this was QA and was meant to be the playground, but regardless it's embarrasing to have a machine crash during a load test.

Now the light bulb goes off...., duh this is "Polyserve", DLM.EXE has absoultely zero to do with anything found on that google search. DLM = Distributed Lock Manager, and is the mechanism polyserve uses to control access to the clustered file system. DLM.exe was running high because the cluster was experiencing large amounts of i/o across many servers due to the fact that we were running the load test during the maintenance windows of the servers (dbcc's, backups, reindexes etc).

The machine crashed because DLM.exe is part of the polyserve service, killing it caused the file system to become unstable, so the server was "fenced" from the clustered environment, exactly as Polyserve is supposed to do. Nice to see that Polyserve worked well, even when the operator is not. |

| Installing SQL Server 2005 on a Cluster | 9/26/2008 1:57:48 PM |

| Moving Blobs | 9/12/2008 3:29:35 PM |

Moving blobs to a dedicated file group, maybe with several data files, is a very good idea ! Of course, if you wait to do this until your database grows quite large, you've got a problem. I define large as 50+ gb.

Currently I have an issue with a 120gb database. 100gb of the data is in one table, with an image data type. I'd like to isolate this tables blob data to a dedicated file group, made up of 4 data files. This is not difficult, what is difficult is making it happen.

I've experimented with doing this 3 ways:

1. SQL Management Studio - 28 hours.

2. SSIS - 18 hours.

3. Custom TSQL Scripts, 4 spids, dividng the table up, 12 hours.

I have found all of them to be very painfully slow. So slow in fact that I'm not able to do it, as I currently can't take a 12+ hour outage.

Knowing your volumetrics and design up front is very important for this exact reason.

SQL Server 2005 can do this quicker with parrallel loads, if the table is partitioned, but this is a 3rd party database from a vendor and can't support partioned tables.

SQL Server 2008 provides some new options for storing the blobs outside the database, which is always my preference if possible.

Below is the script for option 3, handy sometimes:

use emailxaminer_run

go

/*

select min(fileid),max(fileid) from rt_filecache

-- calculations to figure out each spid to run.

1. 980,066 to 7,553,806 start = min(fileid)

2. 7,553,807 to 14,127,547

3. 14,127,548 to 20,701,288

4. 20,701,289 to 27,275,029 end = max(fileid)

select 27275029 - 980066

select 26294963 / 4 --6573740

-- thread 1

select 980066 + 6573740 -- 7553806

select 14127548 + 6573740

*/

declare @min int

declare @max int

Declare @startPosition int

declare @endPosition int

declare @currentPosition int

set @startPosition = 980066

set @endposition = 30000000

set @currentPosition = @startPosition

declare @increment int

set @increment = 1000

While @currentPosition < @EndPosition

Begin

begin tran

INSERT INTO dbo.Tmp_RT_FileCache (FileID, MessageId, FileType, FileSize, datelastmod, FileName, FileBLOB)

SELECT FileID, MessageId, FileType, FileSize, datelastmod,

FileName, FileBLOB

FROM dbo.RT_FileCache with (nolock)

Where FileId Between @currentPosition AND

(Case WHEN @currentPosition + @increment > @endPosition then @endPosition

Else @currentPosition + @increment END)

commit tran

Print 'Where Clause = ' + convert(varchar(10),@currentPosition) + ' AND ' +

Convert(varchar(10), (Case WHEN @currentPosition + @increment > @endPosition then @endPosition

Else @currentPosition + @increment END))

Set @currentPosition =

Case WHEN @currentPosition + @increment > @endPosition then @endPosition

Else @currentPosition + @increment + 1 END

--Print @currentPosition

End |

| Netbackup fails with more 64 Mount Points | 9/25/2008 8:55:45 AM |

Discovered an issue with netbackup failing when there are more than 64 mount points on a system.

A Patch is to be delivered.

Workaround implemented:

ISSUE:

GENERAL ERROR: bpbkar32 can experience a Application popup runtime error when attempting to backup a Polyserve system with more than 64 mount points.

ERROR CODE/MESSAGE:

Status 41 - network connection timed out

ENVIRONMENT/CONDITIONS:

After introducing over 64 mount points onto a NetBackup client, you will experience a limitation.

EVIDENCE:

Event Viewer:

20070228 13:03:52 Application Popup I26 NA Application popup: Microsoft Visual C++ Runtime Library : Runtime Error!

Program: C:\Program Files\VERITAS\NetBackup\bin\bpbkar32.exe This application has requested the Runtime to terminate it in an unusual way. Please contact the application's support team for more information.

SOLUTION/WORKAROUND:

ETA of Fix:

Symantec Corporation has acknowledged that the above-mentioned issue is present in the current version(s) of the product(s) mentioned at the end of this article. Symantec Corporation is committed to product quality and satisfied customers.

This issue has already been correct in 6.5GA and 6.0 MP6. However it is currently being considered by Symantec Corporation to be addressed in a forthcoming Maintenance Pack of 5.1. Please note that Symantec Corporation reserves the right to remove any fix from the targeted release if it does not pass quality assurance tests or introduces new risks to overall code stability. Symantec's plans are subject to change and any action taken by you based on the above information or your reliance upon the above information is made at your own risk. Please refer to the maintenance pack readme or contact NetBackup Enterprise Support to confirm this issue (ET1039189) was included in the maintenance pack.

As future maintenance packs are released, please visit the following link for download and readme information:

http://www.symantec.com/enterprise/support/downloads.jsp?pid=15143

WORKAROUND:

Force NetBackup to use BackupRead() API by performing the following changes: (This will not work if you are using Flashbackup to backup the data)

1. Click Start | Run, type regedit, and click OK

Warning: Incorrect use of the Windows registry editor may prevent the operating system from functioning properly. Great care should be taken when making changes to a Windows registry. Registry modifications should only be carried-out by persons experienced in the use of the registry editor application. It is recommended that a complete backup of the registry and workstation be made prior to making any registry changes.

2. Browse to HKEY_LOCAL_MACHINE\Software\VERITAS\NetBackup\CurrentVersion\Config

3. In the Config registry key, create a new key called NTIO

4. In the NTIO registry key, create a REG_DWORD value, give it the name UseNTIO, and the value 0 (zero)

|

| Polyserve 3.6.1 upgrade | 9/11/2008 2:06:44 PM |

Evaluation of the Polyserve 3.6.1 is underway.

Recently completed the upgrade of our Development cluster. All went smooth.

This is another one of those difficult upgrades that requires multiple outages (though small). First you have to un-install the software and then install the new software. So this causes an outage. The File systems also have to be upgraded, so you have to stop using each file system to upgrade it, this causes another outage.

So far things have been quick and no issues.

Definetly had we not completed the 3.4 to 3.6 upgrade, this would have been very difficult, as the 3.4 to 3.6 upgrade was more complex.

No word yet on Polyserve support for sql server 2008. Though based on the sparse and ADS (Alternative Data Streams) file system options in 3.6.1 they are very close, and I'd bet you will have to be on 3.6.1 as both the sparse and ADS seem to be necessary for sql server 2008. |

| Script to Clone or Compare SQL Server users ? | 9/4/2008 3:51:52 PM |

How often do you receive requests to add a user and make their permissions the same as Joe's ?

How often do you work a problem where something works for {user a} but not {user b} and you need to compare their permissions, to elminate that as the problem.

Rick Mcintosh, developed a superb script to clone a user, which can easily be enhanced to compare two users as well (using exists).

Use at your own risk, so far in our testing it has been great, go to my script vault to get the latest version, under Logins - Clone, http://www.lifeasbob.com/Code/ScriptVault.aspx |

| SQL Instance will not fail back to primary | 9/15/2008 11:06:17 AM |

Better known as, "Polyserve is puking and you get to clean it up !". Technincally it's not fair to blame Polyserve, as the root cause of this issue looks to be a corrupt registry from version 3.4 that was never properly corrected. We've never experienced an issue like this with SQL Instances installed from version 3.6 originally.

When you have a SQL Server instance in Polyserve that will not fail back to it's primary, you know you have a problem. Best bet, call technincal support [that's what maintenance is for!], but here is what happenned to us recently and what we did to correct this issue [we have seen this before and called technical support and concurred this is the permanent fix].

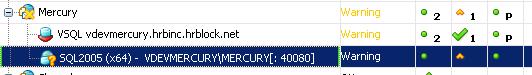

Automated patching of the development environment caused some sql instances to fail over, this is expected. We do not run the instances in "auto fail back" mode, preferring to complete this step manually to minimize "ping-ponging" instances. After patching we reviewed the environment, and it looks good, with the excpetion of one instance, it is on it's secondary, it is running, it is available, but notice the status of "warning".

There is no nice error message in the console. Right clicking the instance to "show alerts", displays nothing. What gives ?

Who cares right, just move the instance back to the primary and let's get working....no dice. The instance won't move, and NO Error or Message is given, crap, you know your in trouble now....for the newly Polyserve initiated, this is when you STOP and call technical support. The more you play with things, the worse it will get and it will cause technincal support consternation in correcting the issue. Since this was development I get to play...

The instance will not fail back, if we rehost, that result is no change. Disabling the instance is the same result. I'm pretty sure you could reboot the server and that would cause it to fail back to the primary, matter of fact, i'm positive....but what if you have other instances on that same physical server and you can't afford an outage ? Also that may temporarily correct the issue, but it doesn't address the root cause and future scenarios of patching or fail over, may result in the same condition.

What the hell is happenning ?

Finally digging through all the logs (including Polyserve logs), i find that Polyserve still thinks the instance is "starting", and since it is in "starting mode", it does not fail it over when requested. There needs to be someway of over-riding this stupid behavior but it is "by design". See pic:

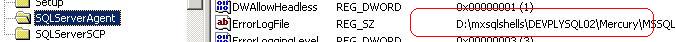

What and why ? Based on past experience and knowing this instance existed from version 3.4 to 3.6, I know this deals with the registry issues. A quick peak of the registry on the server it is currently hosted on shows that some of the registry entries point to the virtual root (3.6) and some of the registry entries point to mxshells (3.4), notice the registry entries below, they should all point to the virtual root:

Now the correct way to fix this is to delete the sql instance from Polyserve (not the machines). Verify the registry entries and sql instance on each machine. Delete any polyserve sql.original, sql.preg etc files (make a copy first). Re-virtualize it and re-verify everything.

Obviously if this is production instance, you might have to wait in doing this, as it is time consuming. In which case you can manually stop the services and see if you can get things to fail back, though you may have to reboot the server. At some point, the only way to correct the root cause is find a maintenance window to allow you to delete the instance from polyserve, correct each individual sql instance and re-virtualize. Fun ! |

| SQLPrepare - SQLException Invalid Handle | 9/10/2008 11:01:08 AM |

Could not find prepared statement with handle 2.

This was a very odd and difficult item to track down and fix. While working with a production migration of an application to sql server 2005, the application did not work properly. Of course a 3rd party product, error message from client was ambiguous at best, but obviously something wasn't working.

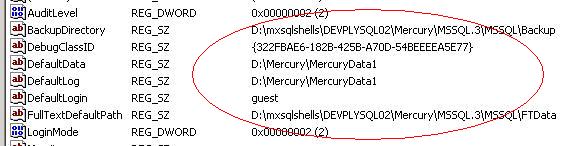

Upon running a sql profiler to try and capture the error message, success, profiler did capture an error (a true shame the front end client doesn't capture the error, as it is being returned to the client, another example of truly great programming!):

Error: 8179, Severity: 16, State: 2

Could not find prepared statement with handle 2.

A Quick review of this error causes me to be slightly dumb founded, as the error is not happenning in QA. The vendor suggests changing the SQL Server Services to run under the same service account as the application, not sure where that suggestion comes from, but I politely take it under advisement, while privately thinking that i'm dealing with someone who is more clue-less than me.

I've seen some isolated instances where odd errors happen with SQL Cumulative updates, and this instance is on cumulative update 6, so I begin to wonder if it is an issue with the cumulative update, but that doesn't hold up as QA is also on cu 6. I've also seen issues with the sp_execute and handles having issues due to client resets and the client MDAC stack, I decide to research this a bit.

Finally I find a KB Article on a similiar subject, not exact, but close enough to try, http://support.microsoft.com/kb/913765/en-us. FIX: An SqlException exception may occur when you call one of the Execute methods on the instance of the SqlCommand class in an ADO.NET 2.0 application.

I ask the vendor about their data access layer, versions of ado.net and patches. The vendor response is less than enlighting and only re-enforces my belief that i'm dealing with less than steller support. Remaining composed is critical, as you can't insult the vendor, we're using their product and I'm in the middle of production upgrade that is melting down, with the business waiting...must keep composed and concentrate on the issue....a few choice explicitives are released, thankfully the mute button works properly.

Now why the DBA has to troubleshoot the data access layer of the client, I don't know, but it never fails that it does come down to this.... The DBA has to be one of the most knowledgeable subject matter experts, and not just of the database, but the entire domain that is involved with the application.

Short story ends, patch above is applied, to the client, and all works. What a shock. |

|

Blog Home

|

|

|

|